Scaling libp2p GossipSub for Large Messages: An Evaluation of Performance Improvement Proposals

The original GossipSub design emphasizes robustness, with less focus on message sizes. However, emerging use cases—such as Ethereum's EIP-4844 and data availability sampling (DAS) require rapid propagation of high data volumes, often in the form of large messages. Many ongoing research efforts attempt to find solutions for efficiently forwarding large messages through the GossipSub network. This post provides a concise overview and performance evaluation of some research initiatives aimed at improving GossipSub to meet the performance needs of modern P2P networks.

Overview

For each subscribed topic, GossipSub nodes maintain a full-message mesh of peers with a target degree , bounded by lower and upper thresholds and , respectively. In parallel, a metadata-only (gossip) mesh includes additional peers for metadata exchange. All messages flow through the full-message mesh, and metadata flows through the gossip mesh.

The metadata contains IHAVE messages, announcing IDs of seen messages. Upon receiving an IHAVE announcement about an unseen message ID, a receiver responds with an IWANT request to retrieve the announced message. IHAVE announcements serve multiple purposes:

- Offer additional resilience in situations involving non-conforming peers or network partitions.

- Speed up message propagation by allowing far-off peers to fetch overdue messages.

Since GossipSub v1.1 [1], replying to IWANT requests is optional, to safeguard honest peers from adversaries. This change encourages peers to make redundant IWANT requests for the same message. While this arrangement works well for small messages, it can be inefficient for bigger ones. This inefficiency arises from an increase in duplicates and a higher number of IWANT requests due to longer transmission times for large messages. As a result, we observe a significant rise in bandwidth utilization and message dissemination times across the network.

IDONTWANT messages in GossipSub v1.2 [2] help reduce some duplicates. However, further efforts are necessary to mitigate this problem [3, 4]. That is why many recent works focus on improving GossipSub's performance when handling large messages [5, 6, 7, 8, 9, 10, 11, 12].

In this post, we evaluate the push-pull phase transition (PPPT) mechanism [10], the GossipSub v1.4 proposal [11, 5], and the GossipSub v2.0 proposal [12] for performance against Gossipsub v1.2. For this purpose, we implement minimal proof-of-concept (PoC) versions of these protocols in nim-libp2p [13] and use the shadow simulator to provide performance evaluation results.

We begin by discussing the issues of message dissemination times and duplicate messages in GossipSub. Next, we provide a brief overview of the proposals under review, followed by the experiments and findings from our performance evaluations.

Message Transfer Time and Duplicates

Assuming uniform link characteristics, message dissemination to full-message mesh concludes in time, where , with , , and being the message size, data rate, and link latency, respectively.

This simplifies network-wide dissemination time to , with indicating the number of hops along the longest path. This implies that a tenfold increase in message size results in an eightyfold rise in for a mesh with , which accumulates across hops. This leads to two fundamental problems:

-

A longer contention interval () increases the chance that peers receive the same message from multiple mesh members during that interval,

leading to redundant transmissions and more duplicates. Reducing inadvertently increases — potentially slowing propagation overall. -

Peers are unaware of ongoing message receptions and may generate redundant IWANT requests for the messages they are already receiving. Most of these requests are entertained by early message receivers, which increases message dissemination time by increasing the workload at peers situated along the optimal path.

Talking about duplicates, a network comprising peers, each with a degree , has a total of edges (links), as every link connects two peers. Assuming that a message traverses every link exactly once, we get at least transmissions.

Only transmissions are necessary for delivering a message to all peers. As a result, we get duplicates in the network. We can simplify average duplicates received by a single peer to . Here, represents the lower bound on average duplicates because we assume that the send and receive operations are mutually exclusive. This assumption requires that message transmission times (and link latencies) are so small that no two peers simultaneously transmit the same message to each other.

However, a large message can noticeably increase the contention interval, which increases the likelihood that many peers will simultaneously transmit the same message to each other.

Authors in [14] explore the upper bound () on duplicates. They argue that a node can forward a received message to a maximum of peers while the original publisher sends it to peers. As a result, we get transmissions in the network. Only transmissions are necessary to deliver a message to all peers. Remaining transmissions are duplicates, which simplifies the upper bound on average duplicates received by each peer to . This rise indicates that larger messages can lead to more duplicates due to longer contention intervals. It is essential to highlight that the impact of IWANT/IDONTWANT messages is not considered in computations above.

Protocols Considered

Push-Pull Phase Transition (PPPT)

In PPPT, authors argue that most redundant transmissions occur during the later stages of message propagation. As a message traverses through the network, the peers forwarding the message should gradually reduce the number of mesh members that directly receive the message (push) and send immediate IHAVE announcements to the remaining peers in the mesh. The remaining mesh members can fetch any missing messages using IWANT requests (Pull). The authors also suggest two strategies to estimate the message propagation stage:

-

Include a hop count in the message header to identify the number of hops traversed by that message. When a peer forwards a received message, it performs a pull operation for a subset of mesh members that equals the specified hop count and a push operation for the remaining mesh members.

-

Infer the message propagation stage by looking into the number of received IHAVE announcements and duplicates for that message. Use this information to choose a balance between pull-based and push-based message forwarding.

The authors suggest that instead of simultaneously pushing a message to the selected peers, sequentially initiating transmission to each peer after a short delay enhances the likelihood of timely receiving a higher number of IDONTWANT requests.

Key Considerations and PoC Implementation

The use of hop count is a more effective and straightforward method for identifying the message propagation stage than relying on the duplicate count. However, this approach may compromise the publisher's anonymity and reveal information about the publisher and its mesh members. Additional due diligence may be needed to address these issues.

In the PoC implementation [15], we use hop count to determine the message propagation stage. When forwarding a message, every peer selects a subset of mesh members equal to the advertised hop count for pull operation and forwards the message to the remaining mesh members. If the advertised hop count exceeds the number of mesh members chosen for message forwarding, the sender relays the message to all selected peers.

GossipSub v1.4 Proposal

GossipSub v1.4 proposal considers longer large-message transfer times () as contention intervals and argues that most duplicates occur during these intervals for two fundamental reasons:

-

Peers are unaware of ongoing message receptions and may generate redundant IWANT requests for messages they are already receiving.

-

Peers can send IDONTWANT announcements only after receiving the entire message. However, a large contention interval increases the likelihood that many redundant transmissions will start before IDONTWANT messages are issued.

GossipSub v1.4 proposal eliminates contention interval with help from two new control messages: PREAMBLE and IMRECEIVING. A PREAMBLE precedes every large message transmission. Upon receiving a preamble, a peer learns about the messages it is receiving and performs two actions:

-

Notify mesh members about ongoing message reception using an IMRECEIVING announcement. On receiving an IMRECEIVING announcement from a peer, mesh members defer sending the announced message to that peer.

-

Defer IWANT requests for messages that are currently being received. Peers also limit the outstanding IWANT requests for any message to one.

Key Considerations and PoC Implementation

The use of PREAMBLE/IMRECEIVING addresses the limitation of IDONTWANT messages. For instance, consider a peer begins receiving a message at time . It can transmit IDONTWANT only after receiving , i.e., at time . Therefore, it can not cancel any duplicate receptions of that start before . In contrast, IMRECEIVING announcements for start at , where denotes PREAMBLE processing time and satisfies . As a result, peer can eliminate all duplicate receptions of that start after , which noticeably reduces duplicates.

The use of PREAMBLE also allows deferring IWANT requests for messages we are already receiving, which can also improve message dissemination time by reducing the workload on peers along the optimal message forwarding path.

It is worth mentioning that a malicious peer can exploit this approach by sending a PREAMBLE and never completing (or deliberately delaying) the promised message transfer. The optional safety strategy in GossipSub v1.4 proposal suggests using a peer score threshold for PREAMBLE processing and a behavior penalty for broken promises. A timeout strategy helps recover such messages.

It is essential to mention that sending and processing of PREAMBLE and IMRECEIVING messages is optional. This flexibility allows for the use of custom safety strategies in various implementations. For example, the ongoing production-grade implementation of GossipSub v1.4 in nim-libp2p allows peers to ignore PREAMBLEs unless they come from mesh members with higher data rates (bandwidth estimation becomes trivial with PREAMBLEs) and good peer scores. This implementation also lets peers choose between a push or pull strategy for handling broken promises.

For the performance evaluations in this post, we utilize the PoC implementation of GossipSub v1.4 [16]. A complete, production-grade version is currently undergoing testing and validation [17].

GossipSub v2.0 Proposal

GossipSub v2.0 introduces a hybrid method for message dissemination that combines both push and pull strategies through two new control messages: IANNOUNCE and INEED. These messages are analogous to IHAVE and IWANT messages, respectively. However, IANNOUNCE messages are issued to the mesh members immediately after validating a received message without waiting for the heartbeat interval. Similarly, INEED requests are made exclusively to mesh members, and a peer generates only one INEED request for a received message.

The balance between push and pull approaches is determined by the parameter. On receiving a message, a peer forwards it to mesh members and sends IANNOUNCE messages to the remaining mesh peers. On receiving an IANNOUNCE for an unseen message, a peer can request it using an INEED message.

Key Considerations and PoC Implementation

The parameter governs the balance between push and pull operations. Setting results in a pull-only operation, which can eliminate duplicates at the cost of increased message dissemination time. In contrast, setting to zero reverts to standard GossipSub v1.2 operation. The authors suggest setting to moderately decrease dissemination time while incurring only a small number of duplicate transmissions.

It is important to note that malicious peers can exploit this approach by delaying or entirely omitting responses to INEED requests. Similarly, sending INEED requests to suboptimal or overwhelmed peers can further increase message dissemination time. The authors propose using a timeout strategy and negative peer scoring to address these issues. If a message transfer does not complete within the specified interval, the receiver decreases the sender's peer score and issues a new INEED request to an alternative mesh member.

For the performance evaluations in this post, we utilize the PoC implementation of GossipSub v2.0 [18] from nim-libp2p. We set and allow any peer to send a single IWANT request for a message, only if it has not previously sent an INEED request for the same message.

Experiments

We conducted a series of experiments under various configurations to evaluate the performance of

the GossipSub v1.4 proposal, the PPPT approach, and the GossipSub v2.0 proposal

against the baseline GossipSub v1.2 protocol.

To support these evaluations, we extended the nim-libp2p implementation to include minimal PoC implementations of the considered protocols

[16,

15,

18]

and used the Shadow simulator [19]

to carry out performance evaluations.

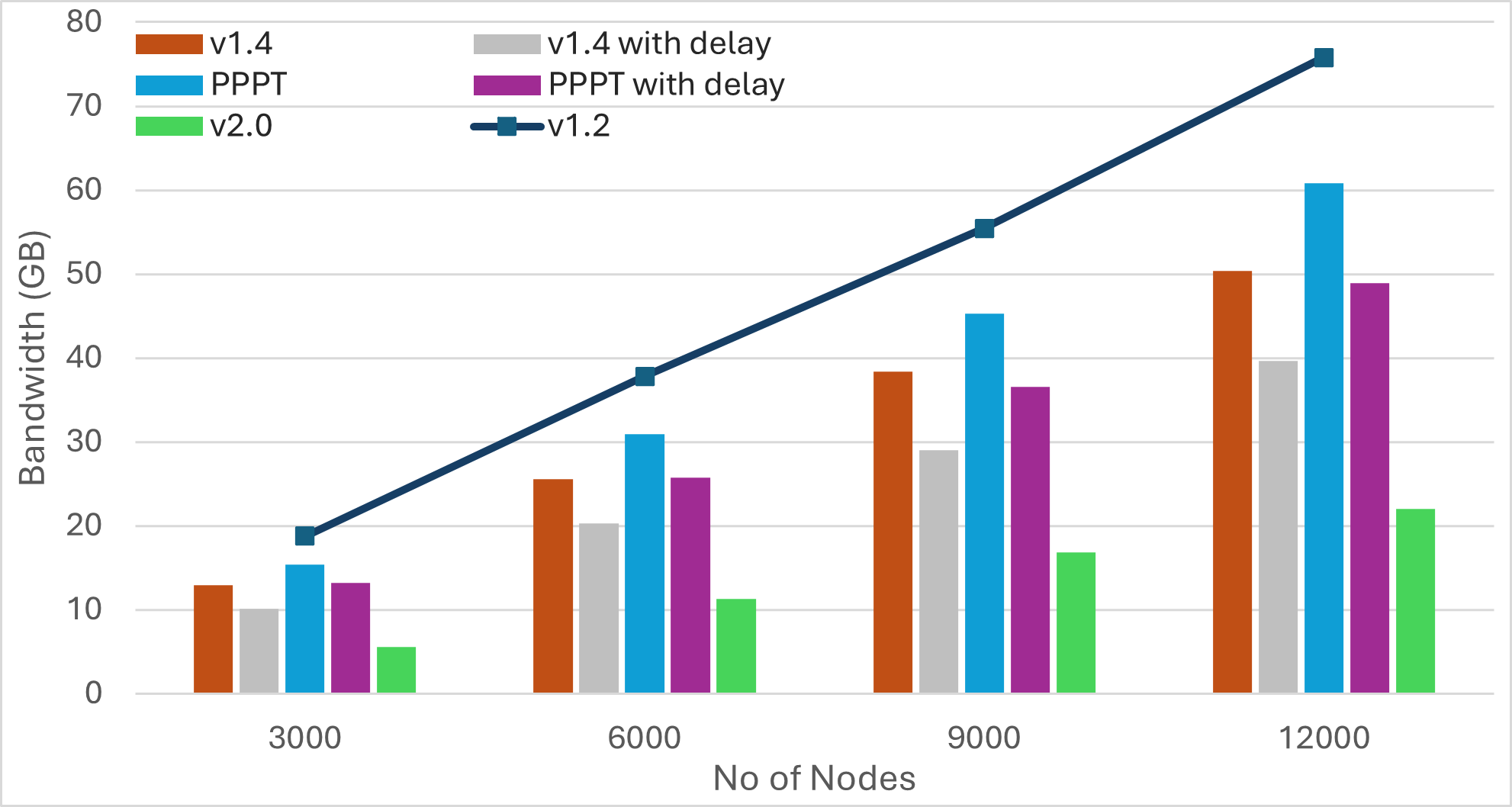

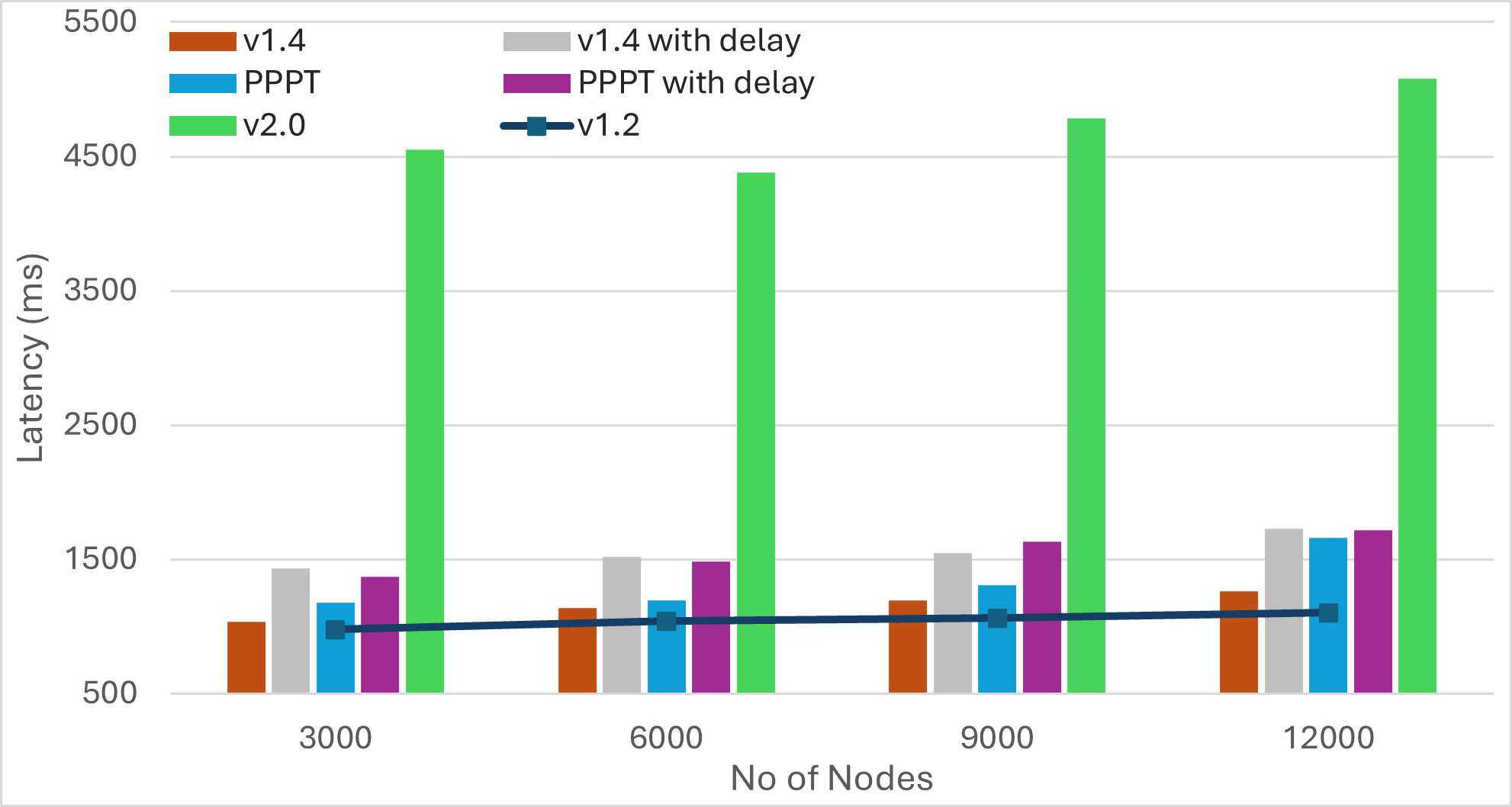

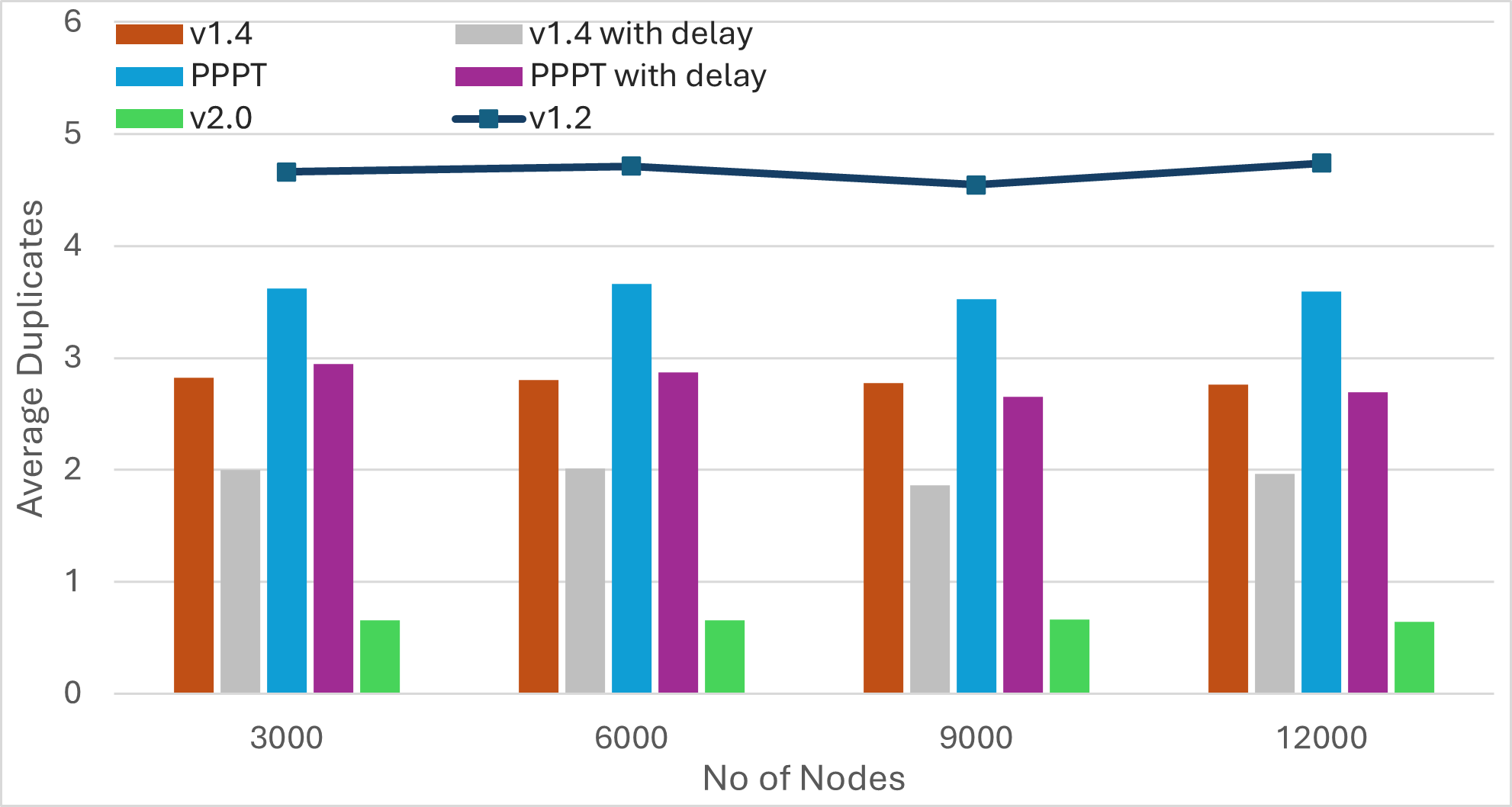

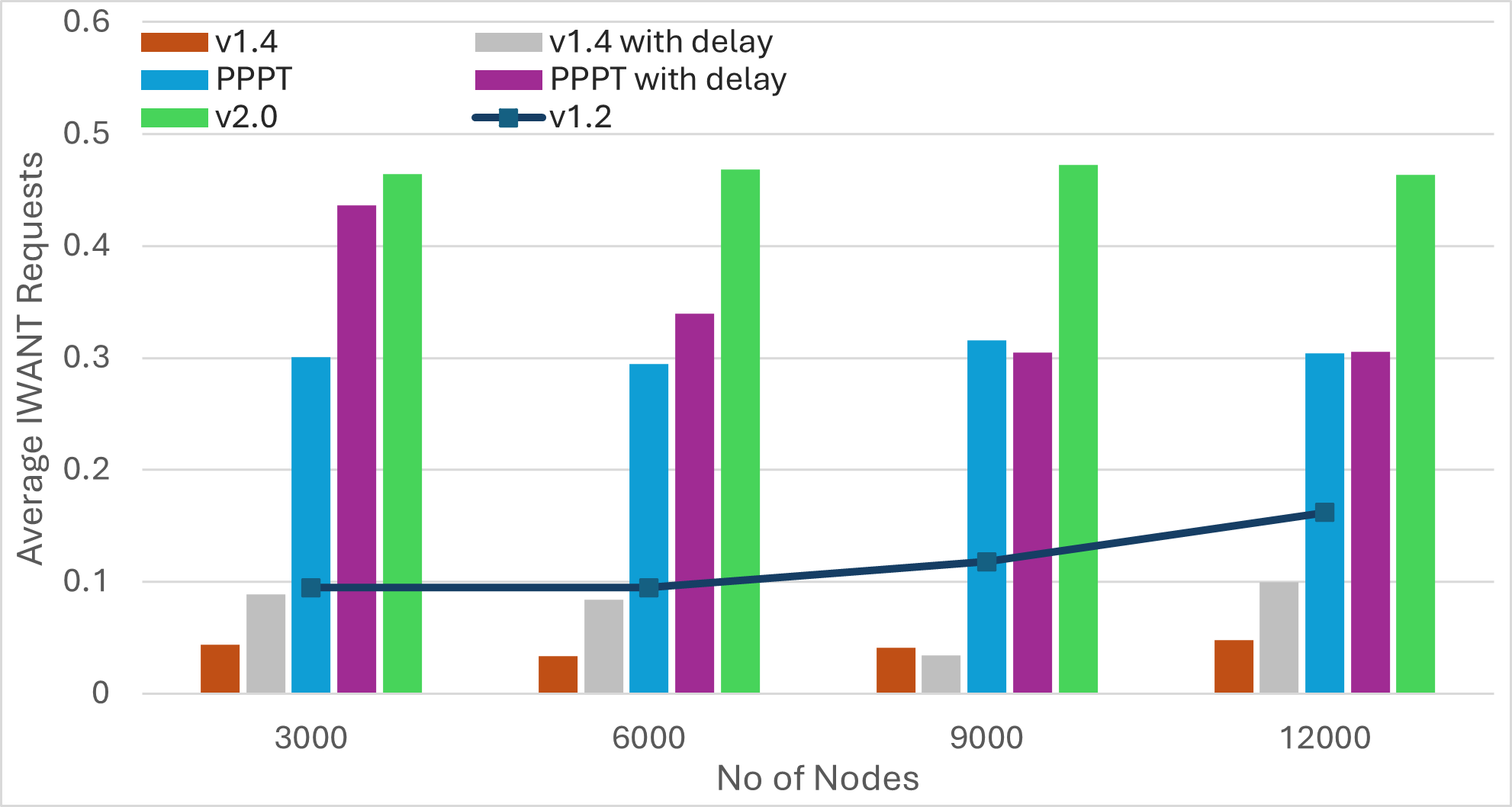

For GossipSub v1.4 and PPPT, we also report results from delayed forwarding, where peers introduce a short delay before relaying a message to every mesh member. This delay helps reduce the number of redundant transmissions by increasing the likelihood of timely receiving a higher number of IDONTWANT notifications.

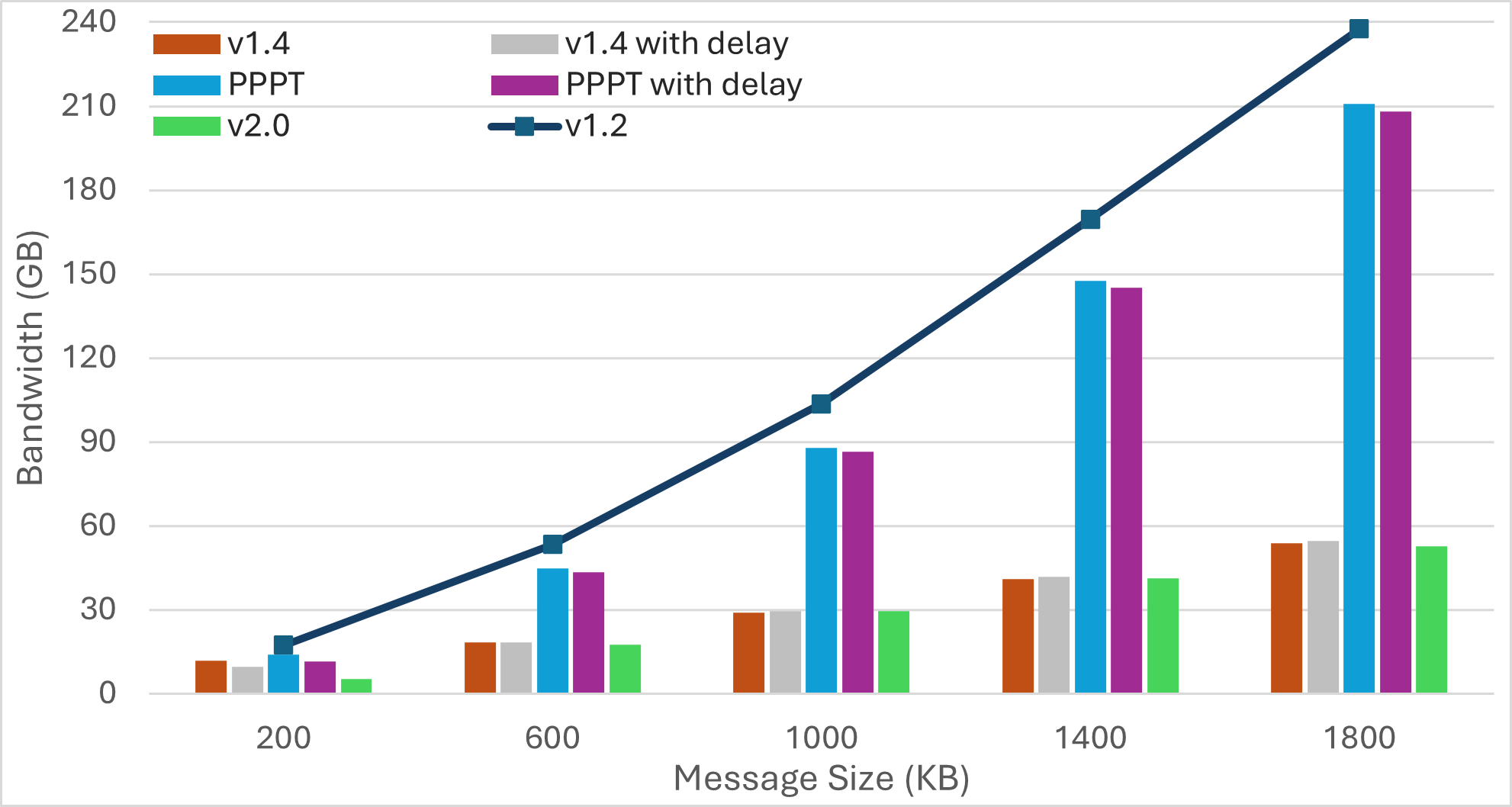

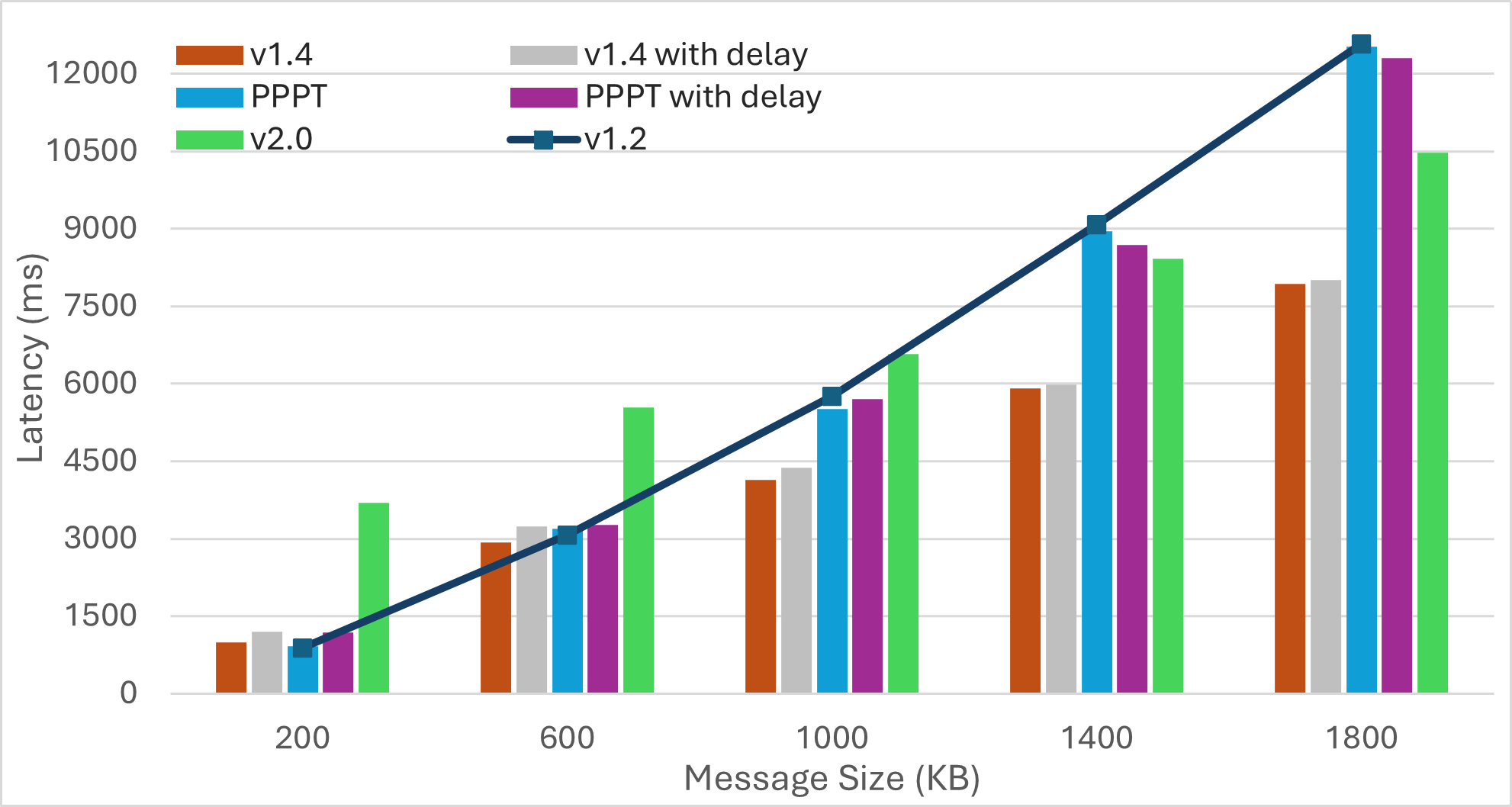

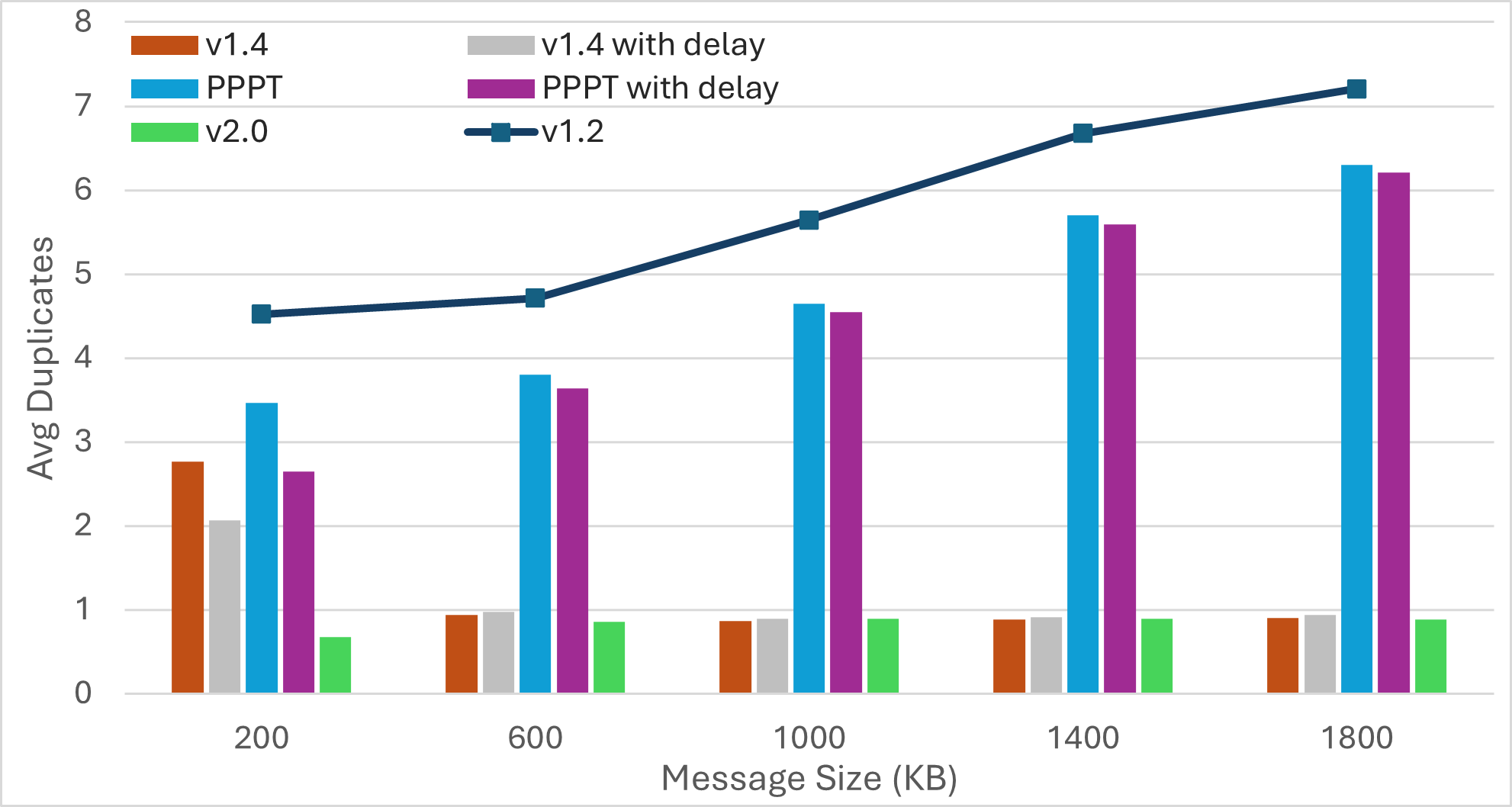

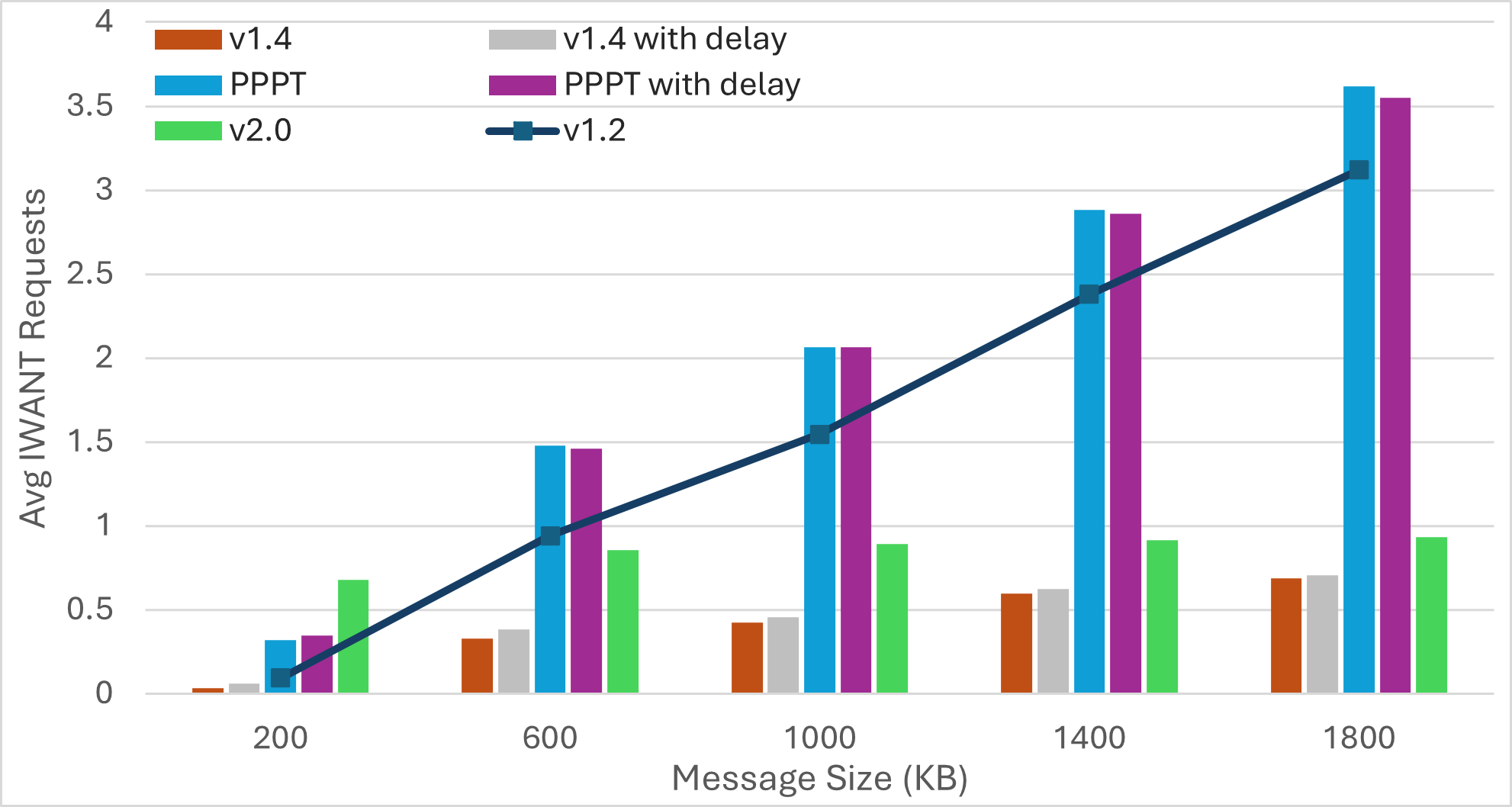

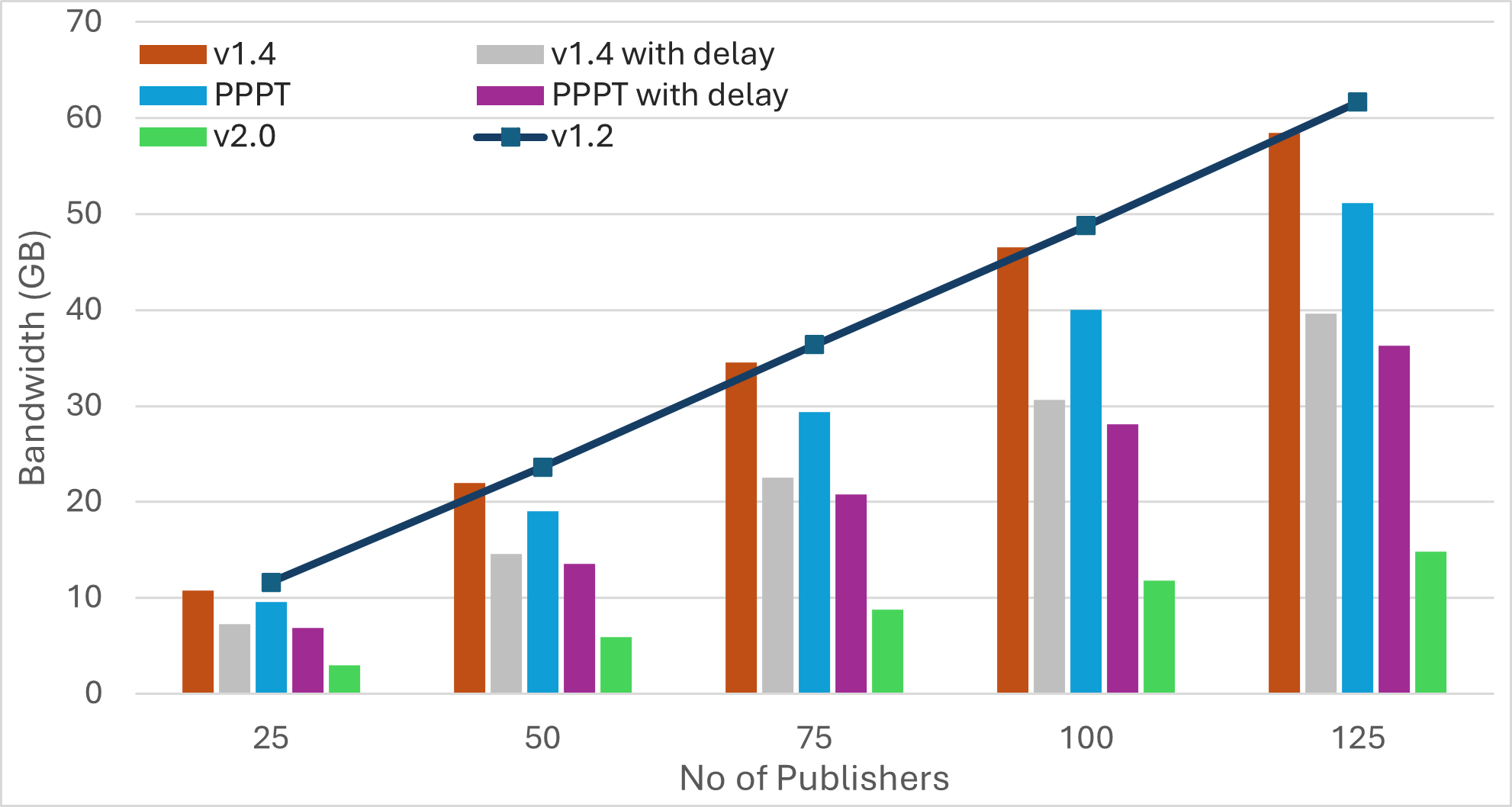

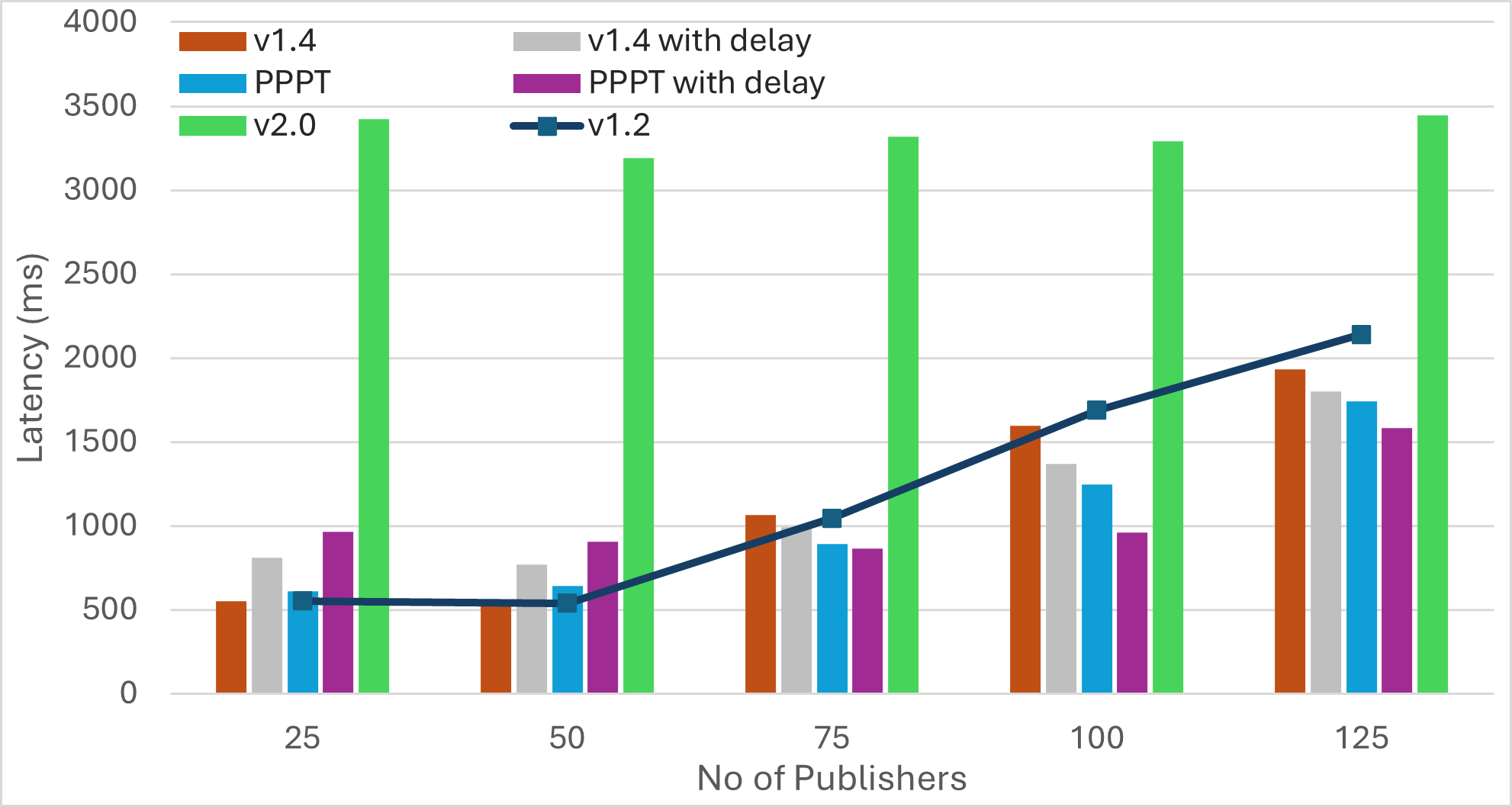

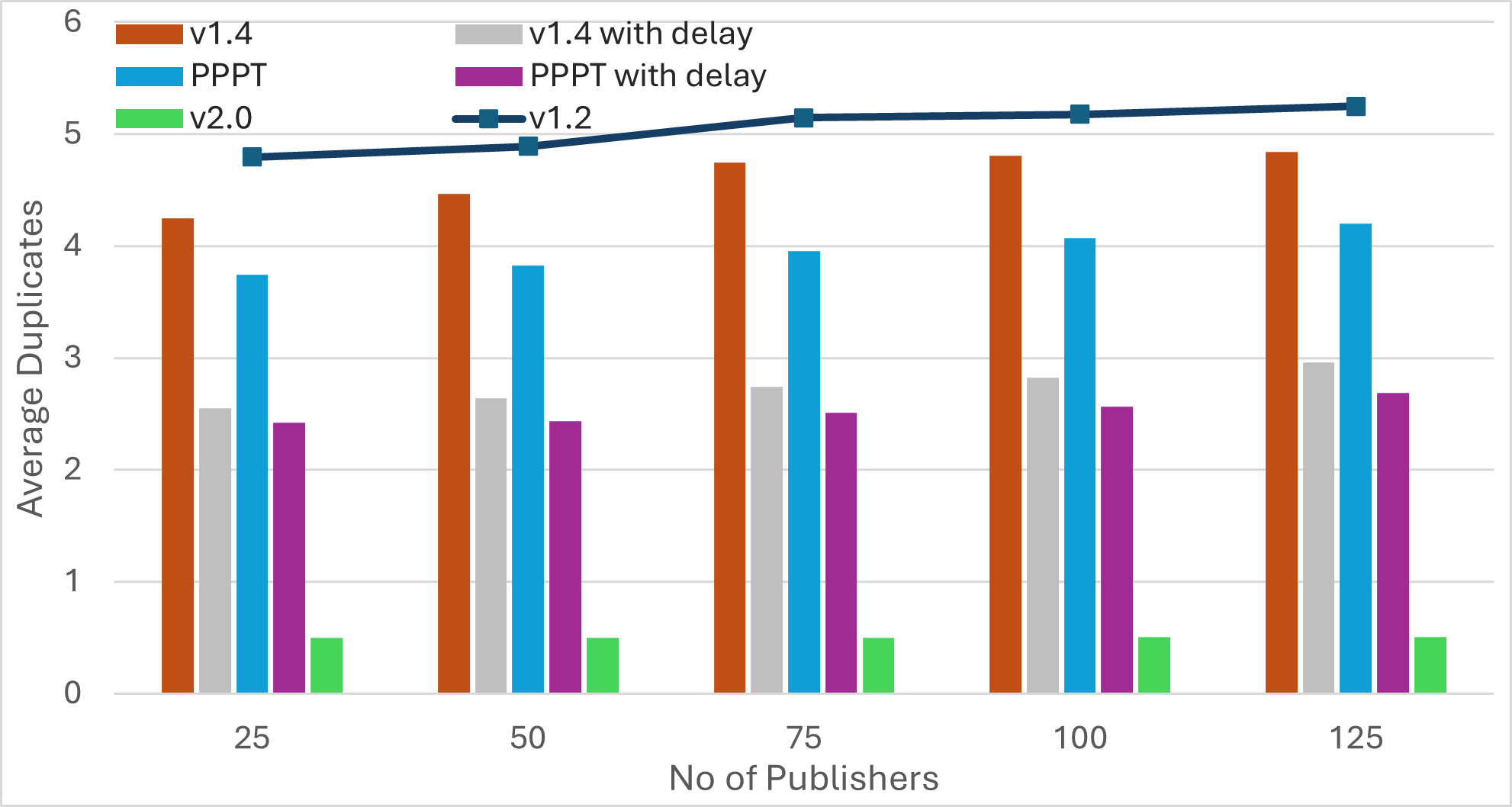

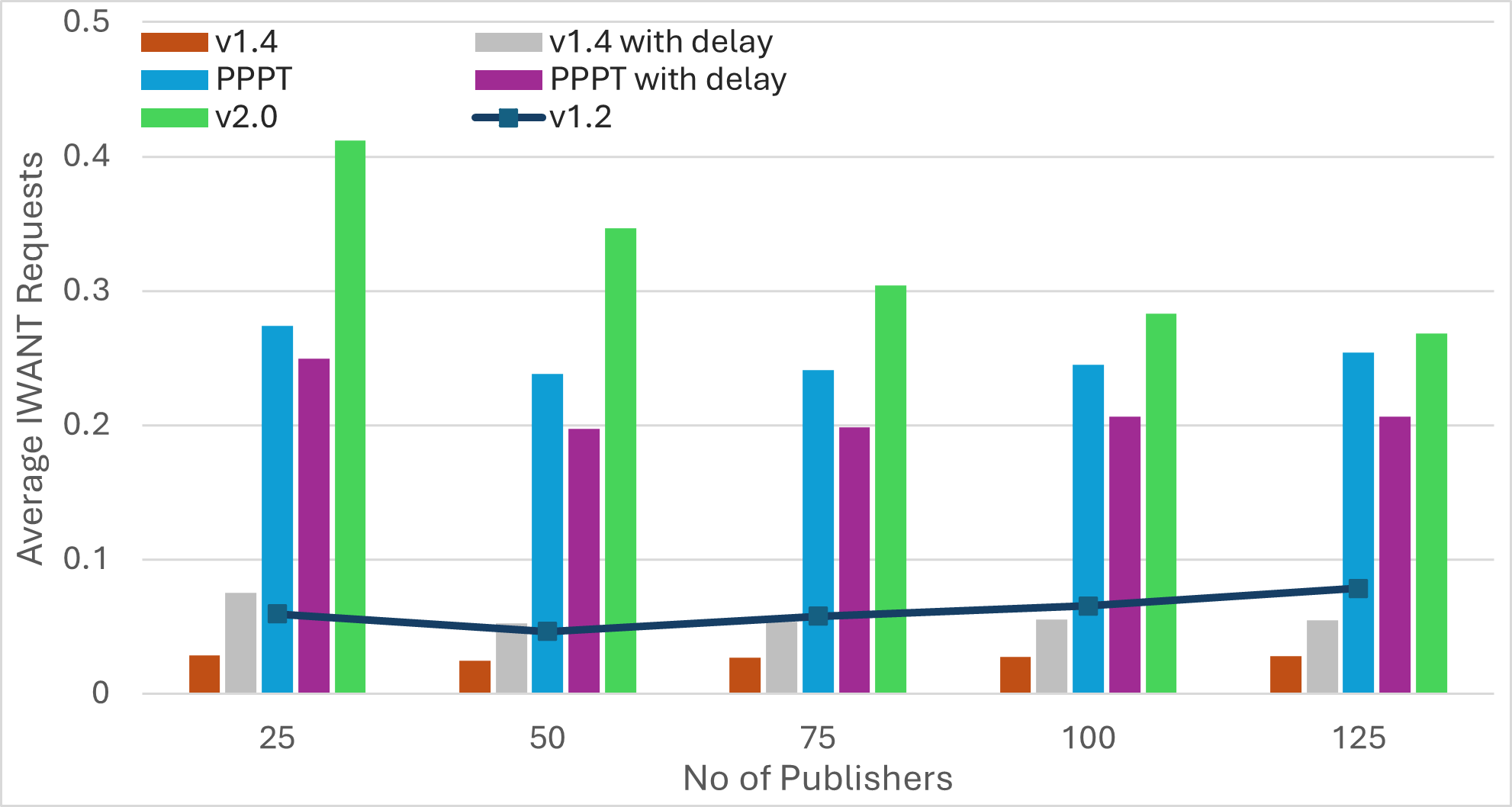

We evaluate performance using network-wide message dissemination time (latency), network-wide bandwidth utilization (bandwidth), and the average number of duplicates received by a peer for every transmitted message. We also report the average number of IWANT requests transmitted by a peer for a single message.

For each experiment, we transmit multiple messages in the network. We average the network-wide dissemination time for these messages to report latency. Bandwidth refers to the total volume of traffic in the network, encompassing control messages and data transmissions (including duplicates and IWANT replies). A peer usually receives multiple copies of any transmitted message. Excluding the first received message, all copies are duplicates. We compute average duplicates received by a peer as where and denote the number of peers and the number of transmitted messages, respectively, and represents the number of duplicates received by peer for message . A similar mechanism computes average IWANT requests.

Three simulation scenarios are considered:

-

Scenario 1: The number of publishers and message size are kept constant while the network size gradually increases.

-

Scenario 2: The number of publishers and the network size remain constant while the message size gradually increases.

-

Scenario 3: The number of nodes and message size remain constant while the number of publishers gradually increases.

In all experiments, we transmit multiple messages such that every publisher sends exactly one message to the network. After a publisher transmits a message, each subsequent publisher waits for a specified interval (inter-message delay) before sending the next message.

Rotating publishers ensures that every message traverses a different path, which helps achieve fair performance evaluation. On the other hand, changing inter-message delays allows for creating varying traffic patterns. A shorter inter-message delay implies more messages can be in transit simultaneously, which helps evaluate performance against large message counts. A longer delay ensures every message is fully disseminated before introducing a new message. Similarly, increasing message size stresses the network. As a result, we evaluate performance across a broader range of use cases.

The simulation details are presented in the tables below. The experiments are conducted using the shadow simulator. We uniformly set peer bandwidths and link latencies between 40-200 Mbps and 40-130 milliseconds in five variations.

Table 1: Simulation Scenarios.

| Experiment | No. of Nodes | No. of Publishers | Message Size (KB) | Inter-Message Delay (ms) |

|---|---|---|---|---|

| Scenario 1 | 3000, 6000, 9000, 12000 | 7 | 150 | 10000 |

| Scenario 2 | 1500 | 10 | 200, 600, 1000, 1400, 1800 | 10000 |

| Scenario 3 | 1500 | 25, 50, 75, 100, 125 | 50 | 50 |

Table 2: Simulation Parameters.

| Parameter | Value | Parameter | Value |

|---|---|---|---|

| 8 | 6 | ||

| 6 | 12 | ||

| Gossip factor | 0.05 | Muxer | yamux |

| Heartbeat interval | 1000 ms | Floodpublish | false |

| Peer Bandwidth | 40-200 Mbps | Link Latency | 40-130ms |

| GossipSub v2.0 | 7 | Forward delay in PPPT/v1.4 with delay | 35 ms |

Results

Scenario1: Increasing Network Size

|  |  |  |

|---|---|---|---|

| Bandwidth | Latency | Average Duplicates | Average IWANT Requests |

Scenario2: Increasing Message Size

|  |  |  |

|---|---|---|---|

| Bandwidth | Latency | Average Duplicates | Average IWANT Requests |

Scenario3: Increasing Number of Publishers

|  |  |  |

|---|---|---|---|

| Bandwidth | Latency | Average Duplicates | Average IWANT Requests |

Findings

-

The number of IWANT requests increases with the message size. Limiting ongoing IWANT requests for each message to one can be beneficial. Additionally, the use of message PREAMBLEs can help eliminate IWANT requests for messages that are already being received.

-

Pull-based approaches can substantially reduce bandwidth utilization, but may result in much longer message dissemination times. However, these approaches can achieve simultaneous propagation of multiple messages by implicitly rotating among outgoing messages. As a result, increasing the number of messages yields similar dissemination times.

-

Transition from push to pull operation during the later stages of message propagation can reduce bandwidth consumption, without compromising latency. However, determining the propagation stage is challenging. Methods like hop counts may compromise anonymity, while using IHAVE announcements can be misleading. For instance, in the case of large messages, peers may receive IHAVE announcements much earlier than the actual message spreads through the network.

-

Push-based approaches achieve the fastest message dissemination but often produce a higher number of duplicate messages. Employing mechanisms like PREAMBLE/IMRECEIVING messages for guided elimination of duplicate messages can significantly reduce bandwidth consumption. This reduction not only minimizes redundant transmissions but also decreases the overall message dissemination time by lessening the workload on peers located along optimal message forwarding paths.

Please feel free to join the discussion and leave feedback regarding this post in the VAC forum.

References

[1] GossipSub v1.1 Specifications

[2] GossipSub v1.2 Specifications

[3] Number of Duplicate Messages in Ethereum’s Gossipsub Network

[4] Impact of IDONTWANT in the Number of Duplicates

[5] PREAMBLE and IMRECEIVING for Improved Large Message Handling

[6] Staggering and Fragmentation for Improved Large Message Handling

[7] GossipSub for Big Messages

[8] FullDAS: Towards Massive Scalability with 32MB Blocks and Beyond

[9] Choke Extension for GossipSub

[10] PPPT: Fighting the GossipSub Overhead with Push-Pull Phase Transition

[11] GossipSub v1.4 Specifications Proposal

[12] GossipSub v2.0 Specifications Proposal

[13] Libp2p Implementation in nim

[14] The Streamr Network: Performance and Scalability

[15] PPPT: PoC Implementation in nim-libp2p

[16] GossipSub v1.4: PoC Implementation in nim-libp2p

[17] GossipSub v1.4: Production-Grade Implementation in nim-libp2p